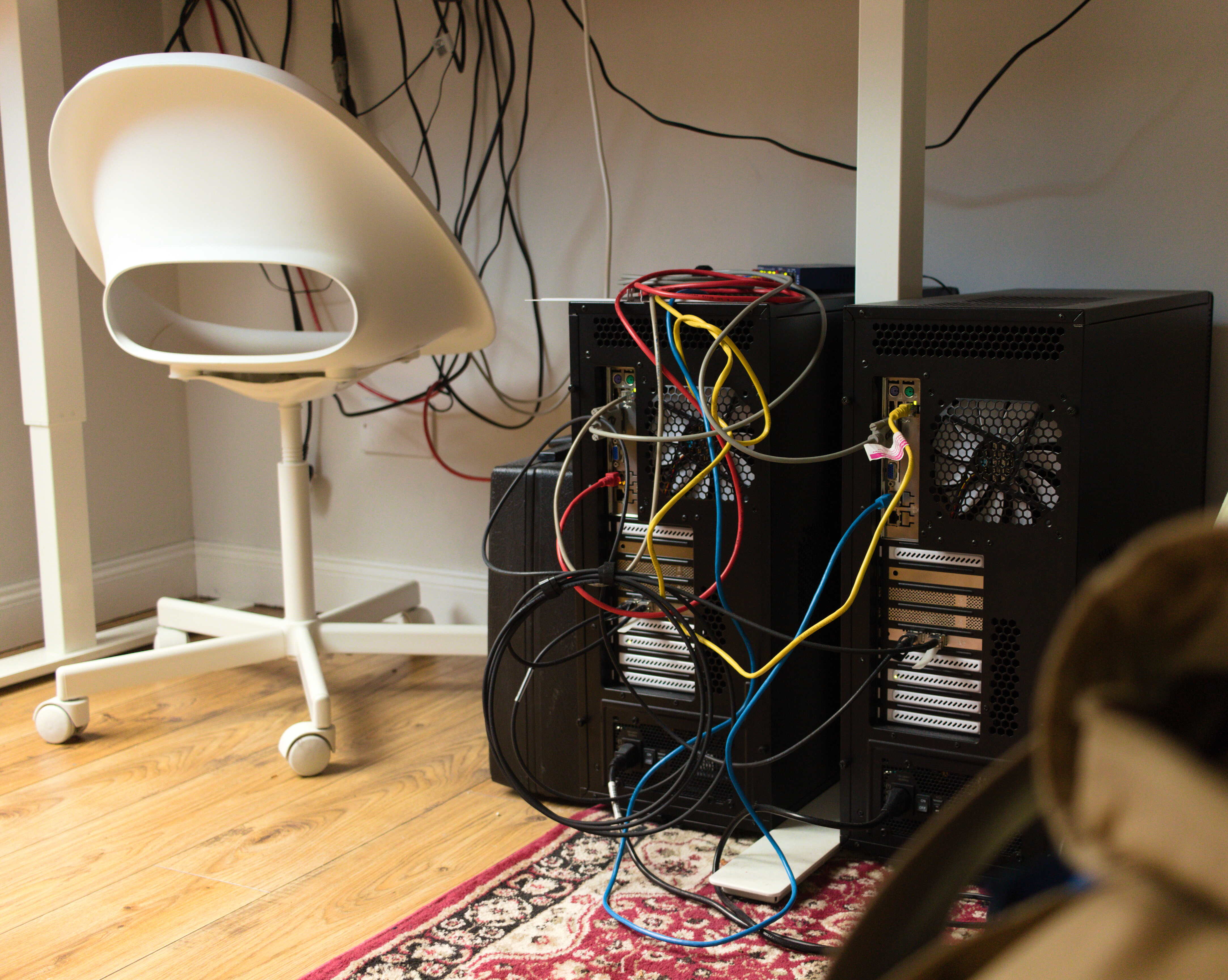

FreeBSD/Ubuntu Dual Boot Homelab: The Testbed in the Bedroom by the Bed

Current events have meant that my workplace is now my home office, and frustratingly this is also where I sleep. On one hand this has resulted in a very short commute, but on the other hand it means that I am living in close quarters with the computers I use for experiments. On the third hand (where did that come from?) it means that I get to have a testbed in the room where I keep my bed.

Of course this raises the serious question: if I write tests from my bed, which bed is the testbed? Unfortunately I only did one year of philosophy, so others will have to offer answers to this grand question.

I am in the unusual (for me) situation of needing to (well, getting to; I love perf) do network performance tests on real hardware. Thankfully my friend Tony was able to lend me two machines from his bioinformatics cluster to play with for a couple of months.

This testbed exists to answer questions of the form:

"How does stock Ubuntu compare to FreeBSD?"

For these tests to be as fair as possible I need the hardware to be as close to identical as possible. Tony enabled this by giving me machines built out to the same spec. Annoyingly his target wasn't "push packets as fast as possible", but was instead "give a reasonable mix of a ton of storage and compute to look at DNA sequences". Anything weird in these computers is clearly his fault, and we are very grateful to get to experience them.

A dmesg from one of the boxes is here, but roughly they are:

- CPU: AMD Opteron(tm) Processor 6380 (2500.05-MHz K8-class CPU)

- 128GB RAM

- SuperMicro MNL-H8DGI6 motherboard

- A pair of SSDs on a PCI-e SATA controller

On top of that I added a pair of dual-port 10GbE interfaces I found 'lying' around the labs: one is an Intel X520 (82599ES) and the other is a Mellanox ConnectX-3 Pro. These interfaces don't match and have different performance characteristics. This is fine for setting up the experiments, but I am going to replace them with a matched pair of single-port 10Gb adapters for 'production' experiments.

My normal method for evaluating if a machine is fast is to build FreeBSD; these managed a `buildworld buildkernel` in a respectable 58 minutes.

Setup

```
                        -left                                 -right
                 +------------------+     10.0.x.x     +------------------+
                 |                  |.10.2        .10.1|                  |
            ipmi |            mlxen0+<---------------->+ix0               | ipmi
 192.168.100.173 |                  |                  |                  | 192.168.100.167
                 |            mlxen1+<---------------->+ix1               |
                 |                  |                  |                  |
                 |          igb0    |                  |    igb0          |
                 +-----------+------+                  +------+-----------+
                             |___                          ___|
    freebsd 192.168.100.10       \_______          _______/    freebsd 192.168.100.20
    linux   192.168.100.11               V        V            linux   192.168.100.21
                                      +--------------+
                                      |    switch    |
                                      +--------------+
                                             ^
                                             |
                                             |  freebsd 192.168.100.2
                                        +---------+
                                        | control |
                                        |   host  |
                                        +---------+
```

The two boxes are named with the suffixes '-left' and '-right' with the running OS setting the prefix, so we have freebsd-left, freebsd-right, ubuntu-left and ubuntu-right.

The machines have three network interfaces on the motherboard: dual Gigabit Intel Ethernet and an interface for IPMI. On each machine, one Ethernet interface and the IPMI interface are connected to a switch, which is in turn connected to the switch in the wireless router that bridges to the WiFi in my house. This setup is a little complicated, but because there isn't Ethernet run up to my bedroom, WiFi is the only sensible way to connect to the Internet. I'd much prefer a bit of NAT weirdness to having to set up WiFi on testbed machines.

I connected the serial ports on -left and -right to my control host, which is in the same switch domain as the hosts. I configured the SuperMicro motherboards to send the BIOS output to the serial port.

I am really not using IPMI for all its abilities; instead it is a fancy remote power button I can press from the control host:

```
[control] $ ipmitool -I lanplus -H 192.168.100.173 -U ADMIN -P ADMIN chassis power on # power on -left
[control] $ ipmitool -I lanplus -H 192.168.100.167 -U ADMIN -P ADMIN chassis power on # power on -right
```
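Because IPMI is only acting as a power button here, these two commands are easy to wrap for later automation. The following is a minimal Python sketch. The helper names `ipmi_power_argv` and `ipmi_power` are hypothetical. The BMC addresses and the ADMIN/ADMIN credentials are the ones used in the commands above, and the sketch assumes `ipmitool` is on the control host's PATH.

```python
import subprocess
from typing import List

# BMC addresses for -left and -right, taken from the ipmitool commands above.
BMCS = {"left": "192.168.100.173", "right": "192.168.100.167"}

# Actions accepted by `ipmitool chassis power`.
ACTIONS = ("status", "on", "off", "cycle", "reset", "soft")


def ipmi_power_argv(box: str, action: str,
                    user: str = "ADMIN", password: str = "ADMIN") -> List[str]:
    """Build the ipmitool command line for one box (hypothetical helper)."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported power action: {action!r}")
    return ["ipmitool", "-I", "lanplus", "-H", BMCS[box],
            "-U", user, "-P", password, "chassis", "power", action]


def ipmi_power(box: str, action: str) -> str:
    """Run ipmitool and return its output; raises CalledProcessError on failure."""
    result = subprocess.run(ipmi_power_argv(box, action),
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Only prints the command; call ipmi_power("left", "on") to actually run it.
    print(" ".join(ipmi_power_argv("left", "on")))
```

Building the argument list separately from running it makes the command easy to log or check before anything touches real hardware.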

Serial Console

The serial ports are then connected to the control computer with an awesome two-headed USB serial cable (I don't know what it is and would buy more if I did). The operating systems on -left and -right are configured to offer consoles over serial, so I don't have to worry about breaking the network and locking myself out when I am far away.

On FreeBSD, getting serial for the loader and the system requires adding config to `loader.conf` and is documented in the FreeBSD handbook. Look at the bottom where it says "Setting a Faster Serial Port Speed" (I think the rest of the stuff on the page is out of date and rebuilding with a custom config is no longer required):

/boot/loader.conf:

```
boot_multicons="YES"
boot_serial="YES"
comconsole_speed="115200"
console="comconsole,vidconsole"
```

This configures the loader to use both the video console and the serial console, tells it to use serial, and sets the serial speed to 115200 baud instead of the slow default of 9600. This baud rate matches across FreeBSD, Ubuntu and the BIOS, so I don't have to reconfigure my serial terminal.
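To put the 9600 vs 115200 difference in numbers, here is a small arithmetic sketch. It assumes 8N1 framing (one start bit, eight data bits, no parity, one stop bit, so 10 bits on the wire per character). It uses one full 80x25 text screen as a hypothetical unit of console output.

```python
def chars_per_second(baud: int, data_bits: int = 8,
                     parity_bits: int = 0, stop_bits: int = 1) -> float:
    """Characters per second for an async serial line (1 start bit assumed)."""
    bits_per_char = 1 + data_bits + parity_bits + stop_bits  # 10 for 8N1
    return baud / bits_per_char


SCREEN_CHARS = 80 * 25  # one full 80x25 text screen (illustrative unit)

for baud in (9600, 115200):
    cps = chars_per_second(baud)
    print(f"{baud:>6} baud: {cps:>6.0f} chars/s, "
          f"one screen in {SCREEN_CHARS / cps:.2f} s")
```

Under these assumptions a full screen takes roughly 2.08 s to draw at 9600 baud and roughly 0.17 s at 115200.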

Getty (the thing that gives you login prompts) on FreeBSD is configured as `onifconsole`, so no further config is required. You can check this in `/etc/ttys`:

/etc/ttys:

```
...
# The 'dialup' keyword identifies dialin lines to login, fingerd etc.
ttyu0 "/usr/libexec/getty 3wire" vt100 onifconsole secure
ttyu1 "/usr/libexec/getty 3wire" vt100 onifconsole secure
ttyu2 "/usr/libexec/getty 3wire" vt100 onifconsole secure
ttyu3 "/usr/libexec/getty 3wire" vt100 onifconsole secure
```
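If you would rather not scan the file by eye, a short Python sketch can list the entries marked `onifconsole`. It assumes the ttys(5) layout shown above: name, quoted getty command, terminal type, then status keywords. It only handles simple lines like these. `console_ttys` is a hypothetical helper, and the `ttyv0` line in the sample is an illustrative non-matching entry added for contrast.

```python
import shlex
from typing import List


def console_ttys(ttys_text: str) -> List[str]:
    """Return tty names whose status keywords include 'onifconsole'."""
    names = []
    for raw in ttys_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        # shlex keeps the quoted getty command together as one field.
        fields = shlex.split(line)
        # Fields 0-2 are name, getty command and terminal type; the rest are
        # status keywords such as on/off/onifconsole/secure.
        if len(fields) >= 4 and "onifconsole" in fields[3:]:
            names.append(fields[0])
    return names


TTYS_SAMPLE = '''
# The 'dialup' keyword identifies dialin lines to login, fingerd etc.
ttyu0 "/usr/libexec/getty 3wire" vt100 onifconsole secure
ttyu1 "/usr/libexec/getty 3wire" vt100 onifconsole secure
ttyv0 "/usr/libexec/getty Pc" xterm onifexists secure
'''

print(console_ttys(TTYS_SAMPLE))
```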

Getting serial for GRUB on Ubuntu (in 2021) requires adding settings to a file in `/etc/default/grub.d` and rebuilding the config file with `update-grub`. This isn't really documented, but information can be found in the grub manual and in a selection of blog posts. For grub serial we need to add:

/etc/default/grub.d:

```
GRUB_TERMINAL="console serial"
GRUB_SERIAL_COMMAND="serial --speed=115200"
```

I am pretty sure the `grub.d` config that ships with Ubuntu is out of date with the actual file: when I rebuilt, the menu timeout broke, going from the default 10 seconds to the 0 seconds set in the `grub.d` file that I edited. I didn't care enough to file a bug report; this was a lot of faff.

To get console messages from the Linux kernel you need to change the flags passed to the kernel when it is booted. You can also do this from `grub.d`:

/etc/default/grub.d:

```
GRUB_CMDLINE_LINUX_DEFAULT="console=ttyS0,115200 console=tty0"
```

This tells the Linux kernel to use ttyS0 (the first serial port) as a console at 115200 baud, and to also use tty0 (the local video console). After a few messages the kernel hands over to init, which will ignore this console config unless you have also configured systemd to offer a console.
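When `console=` is given more than once, kernel messages go to every listed device. The Linux kernel's serial console documentation notes that the last one listed is the device used for `/dev/console`, which with the order above is tty0. The sketch below uses a hypothetical `console_devices` helper that just pulls the console entries out of a command line in order, to make that ordering visible.

```python
import shlex
from typing import List, Optional, Tuple


def console_devices(cmdline: str) -> List[Tuple[str, Optional[str]]]:
    """Return (device, options) for each console= parameter, in order."""
    consoles = []
    for param in shlex.split(cmdline):
        key, sep, value = param.partition("=")
        if key != "console" or not sep:
            continue
        device, _, options = value.partition(",")
        consoles.append((device, options or None))
    return consoles


devices = console_devices("console=ttyS0,115200 console=tty0")
print(devices)                            # [('ttyS0', '115200'), ('tty0', None)]
print("/dev/console ->", devices[-1][0])  # last console= entry: tty0
```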

To configure systemd to offer a login on the serial console you need to enable a serial getty service, and it will handle the magic for you. This is documented on the Ubuntu wiki, but everything there is wrong. Instead, systemd-era instructions are available in blog posts you can find online; I got the best results following documentation for a different distro targeting the Raspberry Pi 3.

```
# systemctl enable serial-getty@ttyS0.service
# systemctl start serial-getty@ttyS0.service
```

You should now have a working getty on serial, but I think you then need to kick something else; I could only get this to work by rebooting.

Booting

To do comparisons I need to be able to boot both operating systems and manage them remotely. Dual booting of some sort means that I can dig into differences between the two platforms quickly and get answers from the running systems.

Dual booting the machines turned out to be a lot harder than I expected. When I got the BIOS output working on serial I thought I was on to a winner, but that pesky SATA controller doesn't play well with the BIOS boot menu. Only the first drive of the pair on the SATA controller appears in the boot selector, leaving me plumb out of luck.

Instead I dove into the Linux world. Knowing that grub can boot FreeBSD, I went with using grub to get a boot menu that I can control from the serial port. (Side note: I know that grub is a multiboot-compatible boot loader, meaning that it will boot anything that matches that spec. I think grub itself is also a multiboot image and can be chained, i.e. grub can boot grub. If that is the case, and FreeBSD's loader is also multiboot compatible (the FreeBSD kernel probably is too), can loader then boot grub? It will take a truly brave person to figure this particular puzzle out.)

After installing FreeBSD to the second SSD on the SATA controller, I got messages about a FreeBSD install being detected when I ran `update-grub`. I think these were just for fun though; I didn't get any new menu entries when I test rebooted. I installed FreeBSD by pulling the drive I had installed Ubuntu to, booting a FreeBSD USB installer and installing to the only remaining drive (thanks, hot swap bay!).

Configuring grub to boot FreeBSD requires adding an entry to one of the extra config files. Internet searching suggested `/etc/grub.d/40_custom`, which I filled out with:

/etc/grub.d/40_custom:

```
#!/bin/sh
exec tail -n +3 $0
# This file provides an easy way to add custom menu entries. Simply type the
# menu entries you want to add after this comment. Be careful not to change
# the 'exec tail' line above.
menuentry "FreeBSD 13.0" {
    set root=(hd1)
    chainloader +1
}
```
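The `exec tail -n +3 $0` line explains the warning in the comment. `update-grub` runs each script in `/etc/grub.d` and collects its output into `grub.cfg`, and `40_custom` simply prints its own contents starting at line 3. The Python sketch below emulates that `tail` call on the file above, using a hypothetical `grub_mkconfig_view` helper, to show which lines end up in the generated config. Adding a line above `exec` would shift the `exec` line itself into the output.

```python
def grub_mkconfig_view(script: str, start_line: int = 3) -> str:
    """Emulate `tail -n +N`: return the text from line N (1-indexed) onward."""
    lines = script.splitlines(keepends=True)
    return "".join(lines[start_line - 1:])


CUSTOM = """#!/bin/sh
exec tail -n +3 $0
# This file provides an easy way to add custom menu entries. Simply type the
# menu entries you want to add after this comment. Be careful not to change
# the 'exec tail' line above.
menuentry "FreeBSD 13.0" {
    set root=(hd1)
    chainloader +1
}
"""

# Everything from the first comment line down is copied into grub.cfg as-is.
print(grub_mkconfig_view(CUSTOM), end="")
```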

A Unix StackExchange answer helped me figure out the rough grub commands to use, and I tried them out on the grub command line (press 'c' from the menu).

The final grub config looks like this (notice that default grub is friendly and doesn't beep by default), along with the `/etc/grub.d/40_custom` above:

/etc/default/grub:

```
# If you change this file, run 'update-grub' afterwards to update
# /boot/grub/grub.cfg.
# For full documentation of the options in this file, see:
#   info -f grub -n 'Simple configuration'

GRUB_DEFAULT=0
GRUB_TIMEOUT_STYLE=menu
GRUB_TIMEOUT=-1 # pause at bootloader menu
GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`
GRUB_CMDLINE_LINUX_DEFAULT="console=ttyS0,115200 console=tty0"
GRUB_CMDLINE_LINUX=""

# Uncomment to enable BadRAM filtering, modify to suit your needs
# This works with Linux (no patch required) and with any kernel that obtains
# the memory map information from GRUB (GNU Mach, kernel of FreeBSD ...)
#GRUB_BADRAM="0x01234567,0xfefefefe,0x89abcdef,0xefefefef"

# Uncomment to disable graphical terminal (grub-pc only)
#GRUB_TERMINAL=console
GRUB_TERMINAL="console serial"
GRUB_SERIAL_COMMAND="serial --speed=115200"

# The resolution used on graphical terminal
# note that you can use only modes which your graphic card supports via VBE
# you can see them in real GRUB with the command `vbeinfo'
#GRUB_GFXMODE=640x480

# Uncomment if you don't want GRUB to pass "root=UUID=xxx" parameter to Linux
#GRUB_DISABLE_LINUX_UUID=true

# Uncomment to disable generation of recovery mode menu entries
#GRUB_DISABLE_RECOVERY="true"

# Uncomment to get a beep at grub start
#GRUB_INIT_TUNE="480 440 1"
```
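Since the point is for the baud rate to match everywhere, it is worth checking that the speed in `GRUB_SERIAL_COMMAND` agrees with the one on the kernel's `console=ttyS0,...` parameter. This is a rough Python sketch, and `grub_settings` and `serial_speeds` are hypothetical helpers. The parser only understands simple `KEY=value` lines. The real file is sourced by a shell, so anything fancier needs an actual shell.

```python
import re
import shlex
from typing import Dict, Optional, Tuple


def grub_settings(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines from /etc/default/grub (not a shell!)."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # shlex strips quotes and, with comments=True, trailing "# ..." text.
        settings[key] = " ".join(shlex.split(value, comments=True))
    return settings


def serial_speeds(settings: Dict[str, str]) -> Tuple[Optional[int], Optional[int]]:
    """Return (grub serial speed, kernel ttyS console speed), None if unset."""
    grub = re.search(r"--speed=(\d+)", settings.get("GRUB_SERIAL_COMMAND", ""))
    kernel = re.search(r"console=ttyS\d+,(\d+)",
                       settings.get("GRUB_CMDLINE_LINUX_DEFAULT", ""))
    return (int(grub.group(1)) if grub else None,
            int(kernel.group(1)) if kernel else None)


GRUB_SAMPLE = '''
GRUB_TIMEOUT=-1 # pause at bootloader menu
GRUB_CMDLINE_LINUX_DEFAULT="console=ttyS0,115200 console=tty0"
GRUB_TERMINAL="console serial"
GRUB_SERIAL_COMMAND="serial --speed=115200"
'''

grub_speed, kernel_speed = serial_speeds(grub_settings(GRUB_SAMPLE))
print(grub_speed, kernel_speed, "match" if grub_speed == kernel_speed else "MISMATCH")
```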

Baseline Measurements

Before running more enjoyable experiments, it is essential to get baseline measurements of what the systems can do. I think I need a bit more of a test framework for network performance tests (I want to sample memory usage and CPU usage and get flame graphs for tests), but for starters it is good to get raw iperf3 numbers.

For each configuration I ran forward and reverse iperf3 tests with UDP and TCP. I let iperf3 run in its default 10-second measurement mode; for UDP I requested unlimited bandwidth (`-b 0`).

For each case I ran iperf3 as a server on `*-right` and the client on `*-left`.

Remember that by default the client iperf3 process sends and the server receives; this is swapped with the `-R` flag.
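The four runs per pairing form a small test matrix, and generating it is the first job I would give the automation glue. Below is a minimal Python sketch that builds the client command lines in the same order as the results that follow. The `iperf3_matrix` name is hypothetical, and the flags are the ones used in this post.

```python
import itertools
from typing import List

SERVER = "10.0.10.1"  # iperf3 server address on *-right in these tests


def iperf3_matrix(server: str = SERVER) -> List[List[str]]:
    """Client command lines for {TCP, UDP} x {forward, reverse}."""
    runs = []
    for proto, reverse in itertools.product(("tcp", "udp"), (False, True)):
        argv = ["iperf3", "-c", server]
        if proto == "udp":
            argv += ["-u", "-b", "0"]  # UDP with no target bitrate limit
        if reverse:
            argv.append("-R")  # server sends, client receives
        runs.append(argv)
    return runs


for argv in iperf3_matrix():
    print(" ".join(argv))
```

Each argument list can be handed to `subprocess.run`. iperf3's `-J` flag switches to JSON output, which is likely easier to post-process than the text summary.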

freebsd-left -> freebsd-right (server)

```
tcp iperf3 -c 10.0.10.1
[ 5] 0.00-10.00 sec 6.58 GBytes 5.66 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 6.58 GBytes 5.65 Gbits/sec receiver

tcp iperf3 -c 10.0.10.1 -R
[ 5] 0.00-10.00 sec 8.34 GBytes 7.16 Gbits/sec 2485 sender
[ 5] 0.00-10.00 sec 8.34 GBytes 7.16 Gbits/sec receiver

udp iperf3 -c 10.0.10.1 -u -b 0
[ 5] 0.00-10.00 sec 3.63 GBytes 3.11 Gbits/sec 0.000 ms 0/2666610 (0%) sender
[ 5] 0.00-10.00 sec 2.03 GBytes 1.74 Gbits/sec 0.006 ms 1173881/2666555 (44%) receiver

udp iperf3 -c 10.0.10.1 -u -b 0 -R
[ 5] 0.00-10.00 sec 3.24 GBytes 2.79 Gbits/sec 0.000 ms 0/2384960 (0%) sender
[ 5] 0.00-10.00 sec 1.91 GBytes 1.64 Gbits/sec 0.003 ms 977341/2384881 (41%) receiver
```
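One detail matters when reading these lines: iperf3 reports the transfer column in binary units, so GBytes here means GiB (1024³ bytes), while the bitrate column is decimal (10⁹ bits/s). For example, 6.58 GiB over 10 s is 6.58 × 1024³ × 8 / 10 ≈ 5.65 Gbit/s, which matches the bitrate column. The Python sketch below parses a summary line and recomputes the bitrate and the UDP loss from it. `parse_summary` is a hypothetical helper, and the regex only covers lines shaped like the ones in this post.

```python
import re
from typing import Optional

GIB = 1024 ** 3  # iperf3's "GBytes" column is binary (GiB)

# Matches summary lines like the ones above, e.g.
# [ 5] 0.00-10.00 sec 6.58 GBytes 5.66 Gbits/sec 0 sender
SUMMARY_RE = re.compile(
    r"\[\s*\d+\]\s+(?P<start>[\d.]+)-(?P<end>[\d.]+)\s+sec\s+"
    r"(?P<xfer>[\d.]+)\s+GBytes\s+(?P<rate>[\d.]+)\s+(?P<unit>[GM])bits/sec"
    r"(?:.*?(?P<lost>\d+)/(?P<total>\d+))?"
)


def parse_summary(line: str) -> Optional[dict]:
    """Parse one iperf3 summary line; returns None if it doesn't match."""
    m = SUMMARY_RE.search(line)
    if m is None:
        return None
    seconds = float(m["end"]) - float(m["start"])
    scale = 1.0 if m["unit"] == "G" else 1e-3  # Mbits/sec -> Gbit/s
    result = {
        "seconds": seconds,
        "reported_gbps": float(m["rate"]) * scale,
        # GiB -> bits, then divide by the decimal 1e9 used for the bitrate.
        "computed_gbps": float(m["xfer"]) * GIB * 8 / seconds / 1e9,
    }
    if m["lost"] is not None:  # UDP lines carry lost/total datagrams
        result["loss_pct"] = 100.0 * int(m["lost"]) / int(m["total"])
    return result


for line in (
    "[ 5] 0.00-10.00 sec 6.58 GBytes 5.66 Gbits/sec 0 sender",
    "[ 5] 0.00-10.00 sec 2.03 GBytes 1.74 Gbits/sec 0.006 ms 1173881/2666555 (44%) receiver",
):
    print(parse_summary(line))
```

The recomputed bitrate will only agree to within rounding, because iperf3 prints the transfer to three significant figures.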

We run baselines so we can understand what future measurements show, and care has to be taken that things are actually fair.

FreeBSD -> FreeBSD on the same hardware is a fair test, but it isn't what we have here. When we compare the forward and reverse modes of the iperf3 measurement, we see that when freebsd-left is the TCP sender we get much lower throughput than when freebsd-right is the sender. My guess is that this is the difference between the offload engines in the Intel and Mellanox cards.

FreeBSD -> FreeBSD for UDP has interesting results. freebsd-left with the Mellanox card is able to sink more packets into the network than freebsd-right with the Intel card. Annoyingly, this is the opposite of the TCP results, where freebsd-right can send more.

This might already be highlighting an interesting place to dig, and it is where I would look next, IF I were comparing network interfaces.

ubuntu-left -> ubuntu-right (server)

```
tcp iperf3 -c 10.0.10.1
[ 5] 0.00-10.00 sec 9.59 GBytes 8.24 Gbits/sec 823 sender
[ 5] 0.00-10.00 sec 9.59 GBytes 8.23 Gbits/sec receiver

tcp iperf3 -c 10.0.10.1 -R
[ 5] 0.00-10.00 sec 11.0 GBytes 9.41 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 11.0 GBytes 9.41 Gbits/sec receiver

udp iperf3 -c 10.0.10.1 -u -b 0
[ 5] 0.00-10.00 sec 2.09 GBytes 1.79 Gbits/sec 0.000 ms 0/1546210 (0%) sender
[ 5] 0.00-10.00 sec 1.50 GBytes 1.29 Gbits/sec 0.006 ms 436284/1546104 (28%) receiver

udp iperf3 -c 10.0.10.1 -u -b 0 -R
[ 5] 0.00-10.00 sec 2.08 GBytes 1.79 Gbits/sec 0.000 ms 0/1544000 (0%) sender
[ 5] 0.00-10.00 sec 1.12 GBytes 965 Mbits/sec 0.015 ms 710878/1543876 (46%) receiver
```

Next up, Ubuntu -> Ubuntu. When looking at later measurements we need an idea of where changes come from, so isolating variables is a good thing to do.

Again, with the TCP tests we see a difference in performance depending on which machine is sending. For Ubuntu -> Ubuntu it is approximately 1.2 Gbit/s (8.24 vs 9.41 Gbit/s), whereas for FreeBSD it is around 1.5 Gbit/s (5.66 vs 7.16 Gbit/s), but FreeBSD starts from a lower baseline.
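The two systems start from different baselines, so the gaps are easier to compare in relative terms. Here is a quick worked calculation using the TCP sender bitrates from the forward and `-R` runs. `direction_gap` is a hypothetical helper.

```python
from typing import Tuple


def direction_gap(forward_gbps: float, reverse_gbps: float) -> Tuple[float, float]:
    """Absolute gap (Gbit/s) and the gap as a percentage of the faster direction."""
    gap = abs(reverse_gbps - forward_gbps)
    return gap, 100.0 * gap / max(forward_gbps, reverse_gbps)


# Sender bitrates (Gbit/s) from the TCP forward and -R runs above.
TCP = {"freebsd": (5.66, 7.16), "ubuntu": (8.24, 9.41)}

for name, (fwd, rev) in TCP.items():
    gap, pct = direction_gap(fwd, rev)
    print(f"{name}: {gap:.2f} Gbit/s gap, {pct:.1f}% of the faster direction")
```

Relative to the faster direction, FreeBSD's gap works out to about 21% and Ubuntu's to about 12%.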

Next up, for UDP with Ubuntu -> Ubuntu we see something really weird: for both the ubuntu-left sender and the ubuntu-right sender, the rate at which we try to send packets is much lower than on the FreeBSD hosts. This correlates with lower overall throughput in both tests, but the received rate dropping to almost half of the sending rate when ubuntu-right is the sender looks really weird.

I have a hunch that the lower sending rate is related to better pacing interactions between iperf3 and the Linux kernel. I have no idea why the received rate is so low when ubuntu-right is the sender.
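The difference in sending rate is clearer as datagrams per second. The sketch below divides the datagram counts from the UDP sender lines of the FreeBSD and Ubuntu runs by the 10 second run length. This is a rough average that ignores ramp-up, and `datagram_rate` is a hypothetical helper.

```python
RUN_SECONDS = 10.0  # iperf3's default test length, used for these runs

# Datagrams attempted by each sender, from the UDP "sender" lines above.
SENT = {
    "freebsd-left": 2666610,
    "freebsd-right": 2384960,
    "ubuntu-left": 1546210,
    "ubuntu-right": 1544000,
}


def datagram_rate(count: int, seconds: float = RUN_SECONDS) -> float:
    """Average datagrams per second over the run."""
    return count / seconds


for sender, count in SENT.items():
    print(f"{sender:>13}: {datagram_rate(count):>9,.0f} datagrams/s")
```

Both Ubuntu senders attempt roughly 154,000 datagrams/s. The FreeBSD senders attempt roughly 238,000 to 267,000, so Ubuntu runs at about 58% of the freebsd-left rate.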

freebsd-left -> ubuntu-right (server)

```
tcp iperf3 -c 10.0.10.1
[ 5] 0.00-10.00 sec 10.7 GBytes 9.19 Gbits/sec 1720 sender
[ 5] 0.00-10.22 sec 10.7 GBytes 8.98 Gbits/sec receiver

tcp iperf3 -c 10.0.10.1 -R
[ 5] 0.00-10.22 sec 8.75 GBytes 7.35 Gbits/sec 1464 sender
[ 5] 0.00-10.00 sec 8.75 GBytes 7.52 Gbits/sec receiver

udp iperf3 -c 10.0.10.1 -u -b 0
[ 5] 0.00-10.00 sec 3.63 GBytes 3.12 Gbits/sec 0.000 ms 0/2667830 (0%) sender
[ 5] 0.00-10.04 sec 1.29 GBytes 1.11 Gbits/sec 0.011 ms 1717151/2667664 (64%) receiver

udp iperf3 -c 10.0.10.1 -u -b 0 -R
[ 5] 0.00-10.04 sec 2.07 GBytes 1.77 Gbits/sec 0.000 ms 0/1524220 (0%) sender
[ 5] 0.00-10.00 sec 1.90 GBytes 1.63 Gbits/sec 0.003 ms 125112/1524149 (8.2%) receiver
```

Finally we get to run it all again with both operating systems in play. If everything were optimal (and we therefore had no work to do) there would be no difference in their performance, but we already know that this isn't true.

Running the tests with differing operating systems gives us an opportunity to see if the receiver side of the test has an impact (rather than just the sender). We can do this by pairing the faster side with the slower side.
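One way to look at the receiver side is to group every UDP receiver line from the three runs by the receiving host. The sketch below does that using the lost/total datagram counts reported above, and the `loss_by_receiver` name is hypothetical. With these numbers, the same receiver sees very different loss depending on the sender. For example, ubuntu-right loses 28.2% of datagrams from ubuntu-left but 64.4% from freebsd-left.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (sender, receiver, lost, total) from every UDP "receiver" line above.
RUNS = [
    ("freebsd-left", "freebsd-right", 1173881, 2666555),
    ("freebsd-right", "freebsd-left", 977341, 2384881),
    ("ubuntu-left", "ubuntu-right", 436284, 1546104),
    ("ubuntu-right", "ubuntu-left", 710878, 1543876),
    ("freebsd-left", "ubuntu-right", 1717151, 2667664),
    ("ubuntu-right", "freebsd-left", 125112, 1524149),
]


def loss_by_receiver(runs) -> Dict[str, List[Tuple[str, float]]]:
    """Group runs by receiving host as (sender, loss % rounded to 0.1)."""
    table: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for sender, receiver, lost, total in runs:
        table[receiver].append((sender, round(100.0 * lost / total, 1)))
    return dict(table)


for receiver, rows in sorted(loss_by_receiver(RUNS).items()):
    for sender, pct in rows:
        print(f"{receiver:>13} <- {sender:<13} {pct:5.1f}% lost")
```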

For now, though, I think the different network cards are introducing too much variation between the systems. The numbers differ here, and that on its own is quite interesting, but there seem to be too many possible explanations. I find the Ubuntu -> Ubuntu reverse test halving the rate very suspicious and want to run the tests again.

The variation in the network cards is actually too much for me; I think it is a red flag in the measurements and would only encourage stupid review comments. This annoyed me enough that I bought a pair of single-port Mellanox ConnectX-3 EN PCIe 10GbE cards to evaluate before running any meaningful experiments.

These systems are up and running. Even with the questions that the baselines raised, they are functional enough to start developing the interesting parts of the experiments and writing enough automation glue to rule out me making mistakes. I can then return, rerun the automated experiments and get better numbers.